GitHub - google/jax: Composable transformations of Python+NumPy programs: differentiate, vectorize, JIT to GPU/TPU, and more
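
The tagline names three of JAX's transformations: differentiation, vectorization, and JIT compilation. Below is a minimal sketch of how they compose, using `jax.grad`, `jax.vmap`, and `jax.jit` on a hypothetical squared-error `loss` for a linear model. The function and data are illustrative assumptions and are not taken from any of the linked pages.

```python
import jax
import jax.numpy as jnp


def loss(w, x, y):
    # Hypothetical example: squared error of a linear model on one example.
    pred = jnp.dot(x, w)
    return (pred - y) ** 2


# Differentiate: gradient of loss with respect to its first argument, w.
grad_loss = jax.grad(loss)

# Vectorize: map over a batch of (x, y) pairs while sharing a single w.
batched_grad = jax.vmap(grad_loss, in_axes=(None, 0, 0))

# JIT: compile the composed function with XLA (runs on CPU, GPU, or TPU).
fast_batched_grad = jax.jit(batched_grad)

w = jnp.array([1.0, 2.0])
xs = jnp.array([[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]])
ys = jnp.array([0.0, 0.0, 0.0])

# One per-example gradient row, 2 * (x . w - y) * x, for each of the 3 examples.
grads = fast_batched_grad(w, xs, ys)
print(grads)  # expected values: [[2, 0], [0, 4], [6, 6]]
```

Because each transformation returns an ordinary Python function, the wrapping order is a design choice. Here `vmap` turns a single-example gradient into per-example gradients (with `in_axes=None` keeping `w` shared), and `jit` then compiles the whole composition once.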

Reimplementing Unirep in JAX

Information, Free Full-Text

jax · GitHub Topics · GitHub

JAX performance can be better (comparison with PyTorch) · Issue #1832 · google/jax · GitHub

From PyTorch to JAX: towards neural net frameworks that purify stateful code — Sabrina J. Mielke

Learn how to leverage JAX and TPUs to train neural networks at a significantly faster speed

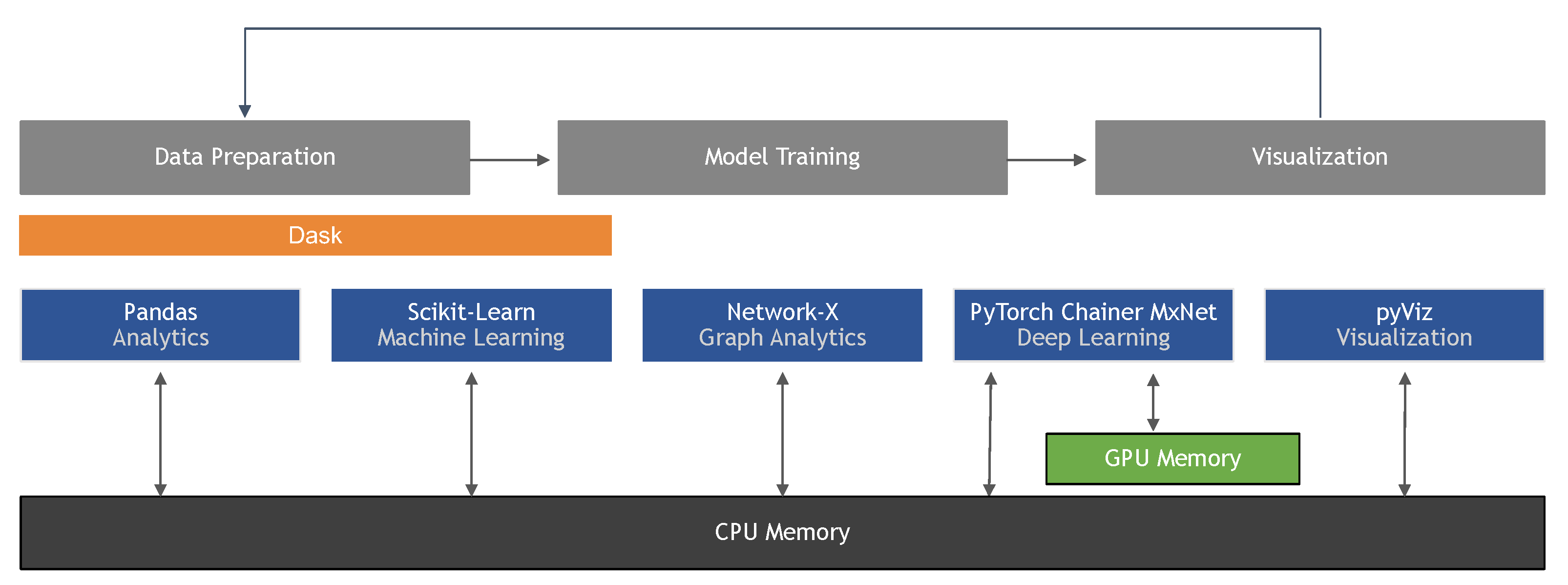

10 Python Libraries for Machine Learning You Should Try Out in 2023!